Advancements in Artificial Intelligence and Machine Learning techniques have reached a point where we are trying to solve ever more complex problems. Over time, however, it has often turned out that the collected data is not as diverse as it should be, and image data becomes increasingly difficult to find. This creates a problem: models cannot be trained well enough to be used in practice. These problems have been addressed with Anime GANs, which introduce an intermediate latent space.

Design Flow

Generative Adversarial Networks (GANs) are a class of neural networks that can be used to model the underlying data distribution from a given set of unlabelled samples. A GAN comprises two competing networks, a generator and a discriminator. The generator learns to map a random vector to high-dimensional data such as an image, while the discriminator learns to distinguish between real and synthesized samples. Eventually, this competition leads to the generation of realistic samples that are indistinguishable to human observers. These models are among the most significant in modern AI research, with enormous potential for realizing generalized AI.
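For reference, the competition between the two networks is commonly written as a minimax objective. The formulation below is the standard one from the general GAN literature, not something stated in this article, and the symbols are assumptions introduced here: $G$ is the generator (the network that produces samples), $D$ is the discriminator, $x$ is a real sample, and $z$ is the random input vector.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to maximize $V$ by scoring real samples close to 1 and generated samples close to 0, while the generator tries to minimize it by producing samples that the discriminator scores as real.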

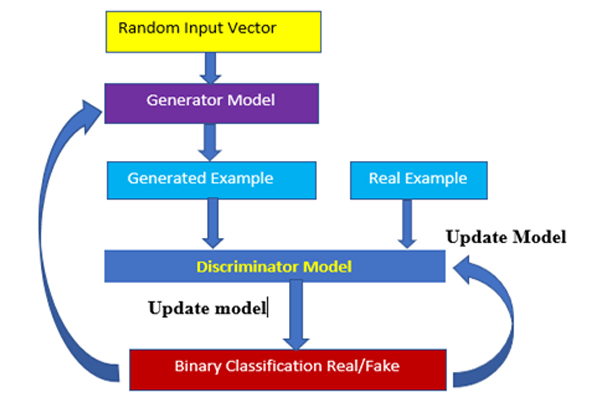

Fig:1 Design flow of Anime (GANs)

The figure shows the sequential flow of the processing pathway of Anime GANs. The discriminator takes an image as input and tries to classify it as “real” or “generated”. As an extension of GANs, StyleGANs provide a broader and more interactive approach to the data being trained and to the way the generator model traditionally works. In StyleGAN, the generator is driven by an intermediate latent space that is injected at each point in the generator, which controls the output of the model and the sources of variation at each point of the network.

Generative modelling is an unsupervised machine learning task, so it is crucial to train the model in such a way that it has enough information and a sufficient number of vectors to produce new data and generate new faces for application data. GANs use two competing neural networks to train a computer to learn the nature of a dataset well enough to generate convincing fakes. One of these networks generates fakes (the generator), and the other tries to classify which images are fake (the discriminator); both improve over time by competing against each other.

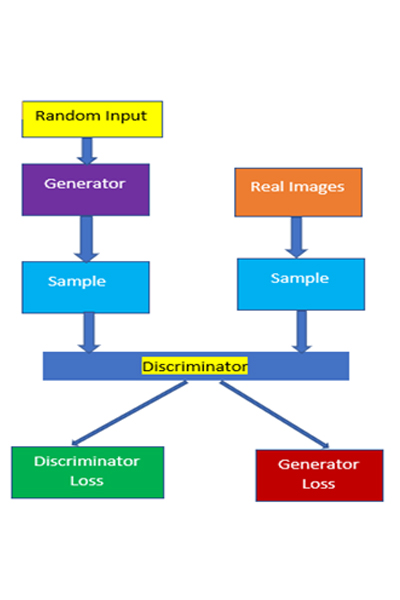

The discriminator network's loss is a function of the generator network's quality. The loss shown in Fig. 2 is high for the discriminator if it is fooled by the generator’s fake images. In the training phase, the discriminator and generator networks are trained in alternation, with the aim of improving the performance of both.

Fig:2 Discriminator and generator loss of Anime (GANs) (Ref. https://anuragjoc.blogspot.com/2021/03/)
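The relationship between the discriminator's loss and the quality of the fakes can be illustrated with a small sketch. It assumes a binary cross-entropy loss and the common non-saturating form of the loss for the network that produces the fakes; the article does not state which losses Anime GANs actually use, and the function names and sample scores below are hypothetical.

```python
import math

def bce(prob, label, eps=1e-12):
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """Mean loss on real images (target 1) plus mean loss on fakes (target 0)."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating loss (assumed): fakes should be scored as real (target 1)."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that an image is real.
d_real = [0.9, 0.8, 0.95]
fooled = [0.9, 0.85, 0.8]      # fakes scored as real: discriminator is fooled
not_fooled = [0.1, 0.05, 0.2]  # fakes scored as fake: discriminator is winning

print("D loss when fooled:    ", round(discriminator_loss(d_real, fooled), 3))
print("D loss when not fooled:", round(discriminator_loss(d_real, not_fooled), 3))
print("G loss when D fooled:    ", round(generator_loss(fooled), 3))
print("G loss when D not fooled:", round(generator_loss(not_fooled), 3))
```

With these made-up scores, the discriminator's loss is larger when it accepts the fakes, and the loss of the network producing the fakes moves in the opposite direction, which is the coupling between the two curves described above.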

In generative adversarial networks, two networks train and compete against each other, each driving the other to improve. The reason for combining both networks is that there is no direct feedback on the generator’s outputs. The only guide is whether the discriminator accepts the generator’s output. The generator consists of transposed convolution layers followed by batch normalization and a leaky ReLU activation function for upsampling. Leaky ReLU functions are one attempt to fix the “dying ReLU” problem. Instead of the function being zero when x < 0, a leaky ReLU has a small slope for negative inputs.
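The two ingredients mentioned above can be sketched in a few lines of plain Python. The first part contrasts a standard ReLU with its "leaky" variant; the slope of 0.01 is a commonly used default, not a value given in this article. The second part uses the standard output-size formula for a transposed convolution (without dilation or output padding) to show how stacked layers upsample a feature map; the kernel size, stride, padding, and starting size of 4 are illustrative assumptions, not the actual Anime GANs configuration.

```python
def relu(x):
    """Standard ReLU: zero output (and zero gradient) for negative inputs."""
    return max(0.0, x)

def leaky_relu(x, negative_slope=0.01):
    """Leaky variant: keeps a small slope for x < 0 so units do not 'die'."""
    return x if x >= 0 else negative_slope * x

def conv_transpose_out(size, kernel=4, stride=2, padding=1):
    """Output side length of a transposed convolution
    (no dilation, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

# Negative inputs are zeroed by ReLU but only scaled down by the leaky variant.
for x in (-2.0, -0.5, 0.0, 1.5):
    print(x, relu(x), leaky_relu(x))

# Each assumed layer (kernel 4, stride 2, padding 1) doubles the spatial size.
sizes = [4]
for _ in range(4):
    sizes.append(conv_transpose_out(sizes[-1]))
print(sizes)  # [4, 8, 16, 32, 64]
```

Under these assumed hyperparameters, four transposed convolution layers take a 4x4 feature map to a 64x64 image, which is the upsampling role these layers play in the network that produces the images.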

More work with near-ideal accuracy could be done to generate human faces, which would help in training algorithms that require natural faces. Because we need to maintain privacy, we would avoid collecting real data from humans; models trained this way could be more intelligent and could also reduce discrimination, especially in the fashion industry.

Prof. Neelapala Anil Kumar

Department of Electronics and Communication Engineering (ECE)

Alliance College of Engineering & Design

Alliance University